Vision Phase

Overview and Environment

In the vision phase, we implement motion detection and facial recognition.

Software support: Python 2.7 + OpenCV 2.4.9

An installation guide can be found HERE.

Motion Detection

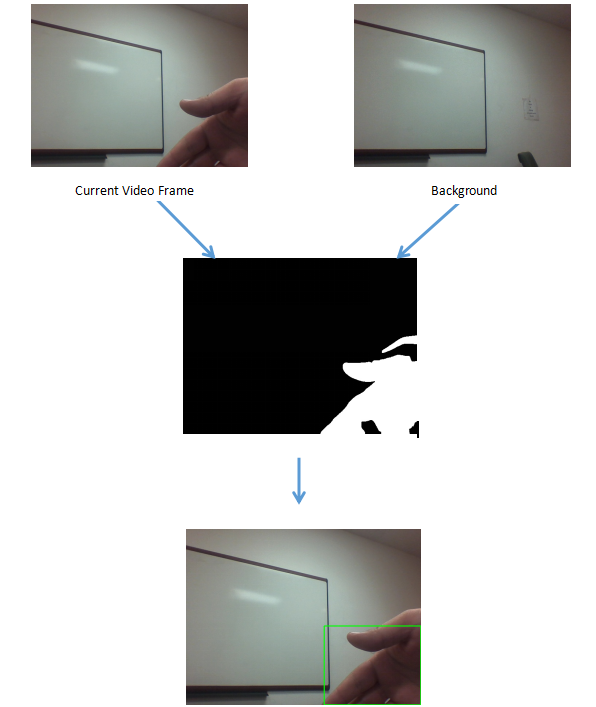

In order to track one moving object in the video frame, we have to set an background as a reference and then compare current video frame with it. Figure 1 can give an intuitive explanation.

Fig.1. The explanation of motion detection

For every video frame, we compute the difference between it and the background. We then threshold the resulting difference image into a pure black-and-white image, in which the moving object appears in white. This lets us find the position of the object and enclose it in a bounding rectangle.
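The differencing, thresholding and bounding steps can be sketched in plain Python on small grayscale images stored as lists of rows. This is a minimal illustration under stated assumptions, not the project's code: in OpenCV 2.4.9 the same steps would typically use `cv2.absdiff`, `cv2.threshold` and `cv2.boundingRect`, while the threshold of 25 and the helper names below are hypothetical. The whole white mask is enclosed in a single rectangle, which matches tracking one moving object.

```python
def abs_diff(frame, background):
    """Per-pixel absolute difference of two grayscale images (like cv2.absdiff)."""
    return [[abs(p - b) for p, b in zip(frow, brow)]
            for frow, brow in zip(frame, background)]


def binarize(diff, thresh=25):
    """White (255) where the difference exceeds `thresh`, black (0) elsewhere,
    like cv2.threshold with THRESH_BINARY. The value 25 is an assumption."""
    return [[255 if p > thresh else 0 for p in row] for row in diff]


def bounding_box(mask):
    """Return (x, y, w, h) enclosing all white pixels, or None if there are none."""
    xs = [x for row in mask for x, p in enumerate(row) if p]
    ys = [y for y, row in enumerate(mask) if any(row)]
    if not xs:
        return None
    return (min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1)


background = [[10] * 5 for _ in range(4)]
frame = [row[:] for row in background]
for y in (1, 2):
    for x in (2, 3):
        frame[y][x] = 200   # a bright "object" entering the scene
frame[0][0] = 15            # small sensor noise, stays below the threshold
mask = binarize(abs_diff(frame, background))
print(bounding_box(mask))   # (2, 1, 2, 2)
```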

Since we are doing monitoring, we want to ignore small objects such as a flying bug. To do this, we set a threshold on the object's contour area: if the area occupied by the detected object is large enough, we treat it as possibly a person in front of the camera.
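The area filter can be sketched the same way. OpenCV would normally find the regions with `cv2.findContours` and measure them with `cv2.contourArea`; the sketch below swaps in a simpler technique, 4-connected flood-fill labeling, and uses each region's pixel count as its area. The function name and the `min_area` value are hypothetical.

```python
from collections import deque


def large_blobs(mask, min_area):
    """Return (x, y, w, h, area) for every 4-connected white region with at
    least `min_area` pixels; smaller regions (e.g. a flying bug) are dropped."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for sy in range(h):
        for sx in range(w):
            if not mask[sy][sx] or seen[sy][sx]:
                continue
            # Breadth-first flood fill collects one connected region.
            queue = deque([(sx, sy)])
            seen[sy][sx] = True
            pixels = []
            while queue:
                x, y = queue.popleft()
                pixels.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if len(pixels) >= min_area:
                xs = [p[0] for p in pixels]
                ys = [p[1] for p in pixels]
                blobs.append((min(xs), min(ys), max(xs) - min(xs) + 1,
                              max(ys) - min(ys) + 1, len(pixels)))
    return blobs


mask = [
    [255, 0, 0, 0, 0],      # a single stray pixel: too small to matter
    [0, 0, 255, 255, 0],
    [0, 0, 255, 255, 0],
    [0, 0, 255, 255, 0],
]
print(large_blobs(mask, min_area=4))  # [(2, 1, 2, 3, 6)]
```

Raising `min_area` makes the filter more robust to insects and noise, at the cost of also ignoring people who are far from the camera and therefore small in the frame.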

Facial Recognition

Facial recognition builds on motion detection. Instead of detecting objects in the whole video frame, we now focus only on the central part of the frame. Once an object is detected and stays in the central area for 40 consecutive frames (about 1 to 2 seconds), the facial recognition thread is triggered.
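The trigger logic can be sketched as a counter over consecutive frames. The text does not define the "central part" of the frame, so the sketch assumes it is the middle half in each dimension and checks the centre of the object's bounding box; the class and function names are hypothetical. A frame with no object (`None`) or an off-centre box resets the counter, so recognition starts only after 40 uninterrupted frames.

```python
REQUIRED_FRAMES = 40  # from the text: about 1 to 2 seconds of video


def in_center(box, frame_w, frame_h):
    """True if the centre of box (x, y, w, h) lies in the middle half of the
    frame. The 'middle half' bounds are an assumption, not from the text."""
    x, y, w, h = box
    cx, cy = x + w / 2.0, y + h / 2.0
    return (frame_w / 4.0 <= cx <= 3 * frame_w / 4.0 and
            frame_h / 4.0 <= cy <= 3 * frame_h / 4.0)


class CenterDwellTrigger(object):
    """Counts consecutive frames with an object in the central area and
    fires once when the count reaches `required`."""

    def __init__(self, frame_w, frame_h, required=REQUIRED_FRAMES):
        self.frame_w, self.frame_h = frame_w, frame_h
        self.required = required
        self.count = 0

    def update(self, box):
        """Feed one frame's detected box (None if nothing moved).
        Returns True only on the frame where recognition should start."""
        if box is not None and in_center(box, self.frame_w, self.frame_h):
            self.count += 1
        else:
            self.count = 0  # object gone or off-centre: start over
        return self.count == self.required


trigger = CenterDwellTrigger(640, 480)
centred = (280, 200, 80, 80)  # box centre (320, 240): middle of the frame
fired = [trigger.update(centred) for _ in range(45)]
print(fired.index(True))      # 39, i.e. the 40th consecutive frame
print(trigger.update(None))   # False: the object left and the counter resets
```

In the full program, the frame on which `update` returns `True` is where the facial recognition thread would be started, for example with `threading.Thread`.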

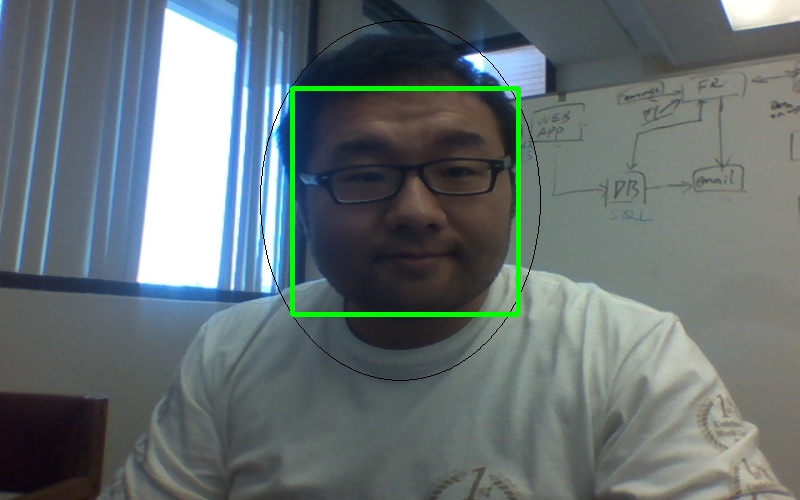

In facial recognition thread, we will try to detect faces in the video frame by calling function detectMultiScale(). This function is provided by OpenCV, we can easily use it. Figure 2 shows one example result of this function.

Fig.2. Result of face detection
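In OpenCV's Python bindings, detectMultiScale() is a method of `cv2.CascadeClassifier` and reports each detected face as an `(x, y, w, h)` rectangle. The sketch below assumes that output format and shows one way to hand a face to the recognizer: keep the largest rectangle (assuming the person nearest the camera matters most) and crop it from the frame. The helper names and the sample detections are hypothetical.

```python
def largest_face(faces):
    """Pick the (x, y, w, h) rectangle with the largest area, or None."""
    if len(faces) == 0:
        return None
    return max(faces, key=lambda r: r[2] * r[3])


def crop(image, rect):
    """Cut rect = (x, y, w, h) out of a row-major image (a list of rows).
    With OpenCV/NumPy images the equivalent is image[y:y + h, x:x + w]."""
    x, y, w, h = rect
    return [row[x:x + w] for row in image[y:y + h]]


# Hypothetical detections, in the (x, y, w, h) form detectMultiScale() uses.
faces = [(10, 5, 4, 4), (1, 1, 2, 2)]
image = [[10 * r + c for c in range(20)] for r in range(12)]
face = crop(image, largest_face(faces))
print(face[0])  # [60, 61, 62, 63]
```

`len(faces) == 0` is used instead of `not faces` because, with OpenCV, the detections may come back as a NumPy array, whose truth value is ambiguous when it holds several rectangles.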

Next, we pass the face found by the detection step to the recognizer to find out who the person is. The recognizer first loads reference images from the database and trains on them, then predicts the id of the detected face together with a confidence value. This value is an inverse measure of confidence (effectively a distance): the greater it is, the less confident the program is in the result.
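The train-then-predict flow can be illustrated with a 1-nearest-neighbour recognizer on raw pixels. This is a deliberately simple stand-in, not OpenCV's face recognition algorithm, chosen because its output has the same shape as described above: an id plus a value that grows as the match gets worse. The helper names, the toy 2x2 "images", and the `MAX_DISTANCE` threshold are all hypothetical.

```python
import math


def train(samples):
    """'Training' for a 1-nearest-neighbour recognizer: store each reference
    image (flattened to a list of pixels) together with its person id."""
    return [(person_id, [p for row in img for p in row])
            for person_id, img in samples]


def predict(model, face):
    """Return (id, distance) of the closest reference image. The distance
    behaves like the confidence value above: larger means less sure."""
    vec = [p for row in face for p in row]
    best_id, best_dist = None, float("inf")
    for person_id, ref in model:
        dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(vec, ref)))
        if dist < best_dist:
            best_id, best_dist = person_id, dist
    return best_id, best_dist


MAX_DISTANCE = 50.0  # hypothetical cut-off for accepting a match

model = train([(1, [[0, 0], [0, 0]]), (2, [[100, 100], [100, 100]])])
label, dist = predict(model, [[90, 100], [100, 100]])
print(label)  # 2
print(dist)   # 10.0
print("recognized" if dist < MAX_DISTANCE else "unknown")  # recognized
```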