Visual Symphony based on DeviceJS Platform

University of Southern California

Designed by Yuan Ma and Qingqing Zheng

Overview

This is the final project for EE579, Wireless and Mobile Networks Design and Laboratory, Spring 2016. Special thanks to Professor Bhaskar Krishnamachari, TA Pradipta Ghosh, and the WigWag company.

This project combines visual art with computer technology. We map the characteristics of music (frequency and amplitude) onto the characteristics of a filament (color and brightness) and call the dev$ APIs to control the color and brightness of one or more filaments. The project can serve as a starting point for a musical fountain or as a prototype for a smart home.

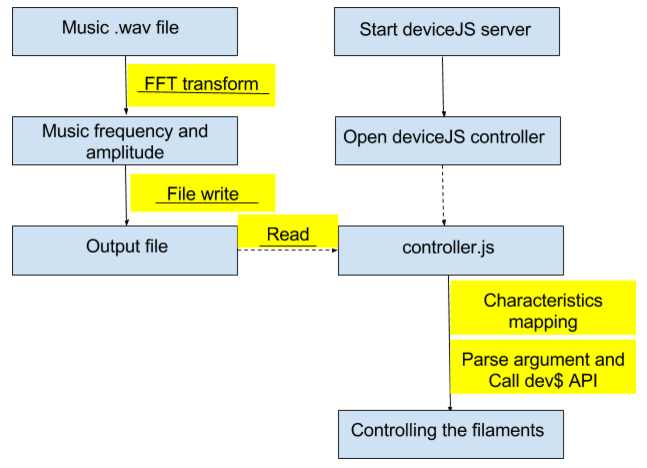

Module Architecture

There are two parallel processes: one detects the music frequency, and the other controls the filaments connected through DeviceJS. The detection process reads the Music.wav file and applies an FFT to obtain the frequency and amplitude of the music, then writes them to the output file. For the control process, we first start the DeviceJS server and run the controller on it. controller.js then reads the output file and maps frequency into the color domain and amplitude into the brightness domain. Once we have the color and brightness values, we use the dev$.selectByID API to set the filament color and brightness.
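
The hand-off between the two processes is a plain text file. As a minimal sketch, assuming each line holds a frequency and an amplitude separated by a space (the format the detector prints), the file could be parsed as shown here. The function name `parse_samples` and the sample values are illustrative and not part of the project code.

```python
def parse_samples(lines):
    """Turn 'frequency amplitude' text lines into (freq, amp) float pairs.

    Blank or malformed lines are skipped; this sketch assumes a truncated
    final line is possible if the detector is still writing the file.
    """
    samples = []
    for line in lines:
        parts = line.split()
        if len(parts) != 2:
            continue
        try:
            samples.append((float(parts[0]), float(parts[1])))
        except ValueError:
            continue
    return samples


# Hypothetical lines in the detector's output format.
example = ["440.0 1200.5\n", "\n", "523.25 980.0\n", "garbage\n"]
pairs = parse_samples(example)
```

With a real run, passing the open data.txt file object to `parse_samples` would yield one pair per audio chunk.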

Music Detection Part

Principle

- Detect and extract the frequency and amplitude from a music file (Python)

- Save the frequency and amplitude to data.txt

- Read the frequency and amplitude and map them to values between 0.0 and 1.0 (JavaScript)

Code Snippet

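    # Process the file one chunk at a time until a short (final) chunk is read.
    while len(data) == chunk*swidth:
        # Play the chunk on the audio output stream.
        stream.write(data)
        # Unpack the 16-bit samples and apply the window function.
        indata = np.array(wave.struct.unpack("%dh"%(len(data)/swidth),\
                                             data))*window
        # Power spectrum: squared magnitude of the real FFT.
        fftData=abs(np.fft.rfft(indata))**2
        # Amplitude of the strongest non-DC bin.
        amp=math.sqrt(max(fftData[1:]))
        # Index of the strongest non-DC bin.
        which = fftData[1:].argmax() + 1
        if which != len(fftData)-1:
            # Refine the peak with quadratic interpolation on the log spectrum.
            y0,y1,y2 = np.log(fftData[which-1:which+2:])
            x1 = (y2 - y0) * .5 / (2 * y1 - y2 - y0)
            thefreq = (which+x1)*RATE/chunk
            print str(thefreq)+" "+str(amp)
        else:
            # Peak is in the last bin, so there is no right neighbor to interpolate with.
            thefreq = which*RATE/chunk
            print str(thefreq)+" "+str(amp)
        # Read the next chunk (assumes wf is the wave file opened earlier in detector.py).
        data = wf.readframes(chunk)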
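
The quadratic interpolation step in the snippet can be tried in isolation. The sketch below is an illustration under stated assumptions, not the project's detector: it uses a naive DFT from the standard library in place of `np.fft.rfft`, assumes a Hann window (the snippet does not say which window it uses), and the names `power_spectrum`, `estimate_peak_frequency`, and the values of `RATE`, `CHUNK`, and `TRUE_FREQ` are hypothetical.

```python
import cmath
import math


def power_spectrum(samples):
    """Naive O(n^2) DFT power spectrum for bins 0..n/2."""
    n = len(samples)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n)
                  for t in range(n))
        spectrum.append(abs(acc) ** 2)
    return spectrum


def estimate_peak_frequency(samples, rate):
    """Find the strongest non-DC bin and refine it on the log spectrum."""
    n = len(samples)
    power = power_spectrum(samples)
    which = max(range(1, len(power)), key=lambda k: power[k])
    if which != len(power) - 1:
        # Fit a parabola through the log power of the peak and its neighbors.
        y0, y1, y2 = (math.log(power[k]) for k in (which - 1, which, which + 1))
        offset = (y2 - y0) * 0.5 / (2 * y1 - y2 - y0)
        return (which + offset) * rate / float(n)
    return which * rate / float(n)


RATE = 8000        # samples per second (hypothetical)
CHUNK = 256        # samples per chunk (hypothetical)
TRUE_FREQ = 440.0  # test tone in Hz

# Hann-windowed sine tone; bin spacing is RATE / CHUNK = 31.25 Hz.
window = [0.5 - 0.5 * math.cos(2 * math.pi * t / (CHUNK - 1)) for t in range(CHUNK)]
tone = [math.sin(2 * math.pi * TRUE_FREQ * t / RATE) * window[t] for t in range(CHUNK)]
estimate = estimate_peak_frequency(tone, RATE)
```

The tone falls between two bins, so the raw peak index alone would report the nearest bin center (437.5 Hz here); the interpolated offset moves the estimate toward the true frequency.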

DeviceJS part

Read data.txt to get the frequency and amplitude of the music file. After scaling and normalization, the frequency is mapped to color and the amplitude to brightness. The DeviceJS dev$ API is then called to pass the color and brightness to the filaments, which is how we control them.

Principle

- Start the DeviceJS server: devicejs start

- Start the WigWag controller: sudo devicejs run ./

- Run the controller.js file to start the control process

Code Snippet

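    // Map frequency to hue; 1050 is the upper frequency bound.
    if (freq <= 0) { color = 0.01; }
    else if (freq > 1050) { color = 1.0; }
    else {
        // toFixed returns a string, so convert back to a number.
        color = Number((freq / 1050).toFixed(3));
    }
    // Map amplitude to lightness, clamped to [0.15, 1.0].
    if (amp <= 0) { brightness = 0.15; }
    else if (amp > 10000000) { brightness = 1.0; }
    else {
        brightness = Number((amp / 10000000 * 1.5).toFixed(3));
        if (brightness < 0.15)
            brightness = 0.15;
        if (brightness > 1.0)
            brightness = 1.0;
    }
    // Apply the color to the filament with this device ID.
    dev$.selectByID('WDFL000012').set('hsl', {h: color, s: 1, l: brightness});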
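
To check the mapping without a filament attached, the same thresholds can be mirrored offline. This is a hedged Python sketch of the JavaScript logic in the snippet, with the constants 1050 and 10000000 taken from it; the function names `map_color` and `map_brightness` are hypothetical, and rounding at exact ties may differ slightly from JavaScript's `toFixed`.

```python
FREQ_MAX = 1050.0      # upper frequency bound from the JavaScript snippet
AMP_MAX = 10000000.0   # upper amplitude bound from the JavaScript snippet


def map_color(freq):
    """Map a frequency to a hue value, following the snippet's thresholds."""
    if freq <= 0:
        return 0.01
    if freq > FREQ_MAX:
        return 1.0
    return round(freq / FREQ_MAX, 3)


def map_brightness(amp):
    """Map an amplitude to a lightness value clamped to [0.15, 1.0]."""
    if amp <= 0:
        return 0.15
    if amp > AMP_MAX:
        return 1.0
    scaled = round(amp / AMP_MAX * 1.5, 3)
    return min(max(scaled, 0.15), 1.0)
```

For example, `map_color(525)` gives 0.5 and `map_brightness(5000000)` gives 0.75, while very quiet chunks are held at the 0.15 floor.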

Command Line

    $ python detector.py seeyouagain.wav > data.txt
    $ devicejs start
    $ sudo devicejs run ./
    $ run controller.js

Experiment Demo 1

The following is the experiment demo when connecting to one filament:

Experiment Demo 2

The following is the experiment demo when connecting to two filaments:

Troubleshooting

The following are problems we ran into during the project; you may encounter some of them as well. Refer to this section when troubleshooting.

- Make sure that you meet all the software and hardware requirements before starting, e.g., the gcc and g++ versions.

- If detector.py fails to run and an "EOFError" appears, check that the .wav music file was downloaded completely.

- If you modify the code or use a different music file, make sure you map the range of frequency and amplitude to the proper range of color and brightness. For WigWag devices specifically, color and brightness both range from 0.0 to 1.0.

- If the code is fine and the connection between the computer and the board is fine, but the filament does not react, wait a few seconds. The network delay between the controller and the filament is quite high, and it may take 10 or more seconds for a control packet to reach the filament. If the filament still does not react after a while, check the controller terminal to see whether the network connection is still up, whether the controller buffer has overflowed, or whether the transmission has timed out.
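
When switching to a different music file, one way to pick new scaling bounds is to scan the detector output for its largest frequency and amplitude first. The sketch below assumes the (frequency, amplitude) pairs have already been read from data.txt; `suggest_scales`, `normalize`, and the sample values are hypothetical helpers for illustration only.

```python
def suggest_scales(samples):
    """Return (max_freq, max_amp) over a list of (freq, amp) pairs."""
    if not samples:
        raise ValueError("no samples to scan")
    max_freq = max(freq for freq, _ in samples)
    max_amp = max(amp for _, amp in samples)
    return max_freq, max_amp


def normalize(value, scale):
    """Divide by the scale and clamp the result into [0.0, 1.0]."""
    return min(max(value / float(scale), 0.0), 1.0)


# Hypothetical detector output for a new music file.
samples = [(220.0, 1000000.0), (880.0, 4000000.0), (440.0, 2000000.0)]
scales = suggest_scales(samples)
```

The returned maxima can then stand in for the fixed frequency and amplitude bounds in the controller, so that the mapped values stay within 0.0 to 1.0.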

Open Source

ppt slides for the project (click to download)

Software and Hardware Support

- Software support: Python 2.7.3, Node v4.3.1, gcc 4.8.1, g++ 4.8.1

- Hardware support: WigWag devices (filament), USB cable A-Male to B-Male

- Operating system support: Ubuntu 12. or higher

- Platform support: DeviceJS + modules

Contact

Email addresses:

Qingqing Zheng: qingqinz@usc.edu

Yuan Ma: mayuan@usc.edu